Is human thinking a kind of symbol manipulation? If so, manipulating symbols would be sufficient for intelligence, and since machines manipulate symbols, they could be intelligent too. This idea is a well-known position in the philosophy of AI, reaching back to 1976, when it was formulated by Allen Newell and Herbert A. Simon. Both regarded a physical symbol system as a necessary and sufficient condition for thinking 1. How does it hold up more than four decades later?

What is this tradition of explaining such

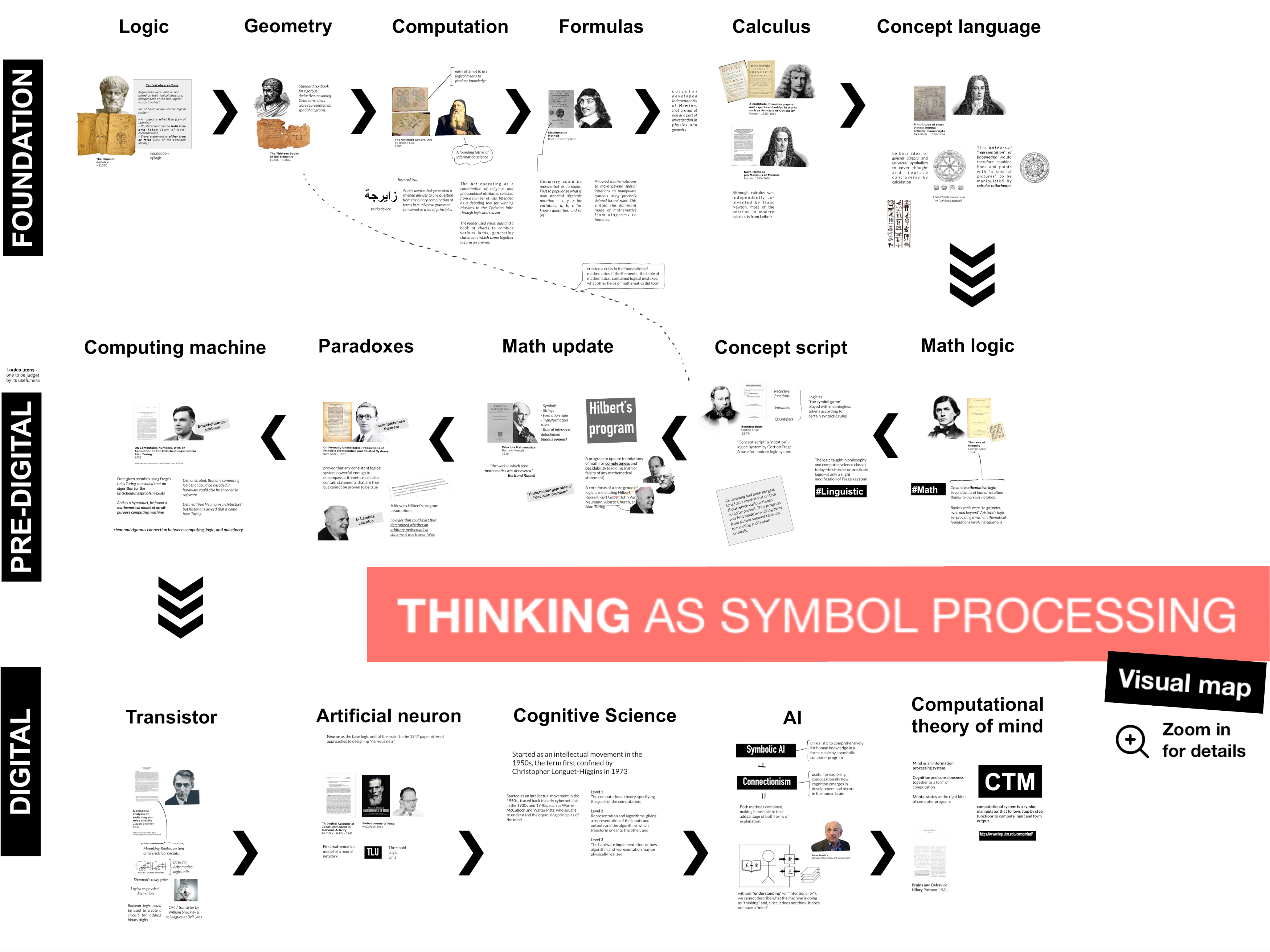

Let’s do something wild here and, for the sake of understanding, look at this highly criticized idea not just across the four decades since its inception, but from the more neck-breaking perspective of millennia. After all, Newell and Simon followed a tradition of thought that can only be understood deeply by going back to the very roots of its ideas. It so happens that those roots take us all the way back to Aristotle and Ramon Llull.

The tradition of describing the nervous system and cognition as computation and signal processing reaches back to the very beginnings of the key discoveries in logic and math. The concepts developed back then (and improved upon over time) are a set of useful tools today, allowing us to calculate a vast number of things, from building structures to winning chess strategies.

The history of calculation is the history of the computer and of the ideas that led to it, which emerged from mathematical logic, a discipline that was still obscure and cult-like in the 19th century. A brief history of those ideas can be found in an article by Chris Dixon, a TechCrunch writer and general partner at Andreessen Horowitz with a major in philosophy. 2

But the influences that led us to perceive brains as computers are also rooted in the inception of the information sciences, where a founding father figure is sometimes found in Ramon Llull, the Catalonian polymath and logician. Back in the thirteenth century, Llull became obsessed with an Arab mechanical device that could provide a rhymed answer to any given question by combining symbols. Motivated by a pursuit of truth and knowledge, he developed a compendious system for posing and answering philosophical queries using a similar concept, with the inclusion of some simplified causal relations. Llull’s fascination with ‘computing answers’ was carried on by his scientific descendants, including such prominent figures as Giordano Bruno and Leibniz, a co-inventor of calculus, who admired the universal ambition and clever combinatorics of Llull’s methods. As a result, Leibniz developed a further system for manipulating universal symbols 3, which only later turned into bits and bytes, when math crossed paths with electrical circuits.

This happened thanks to Claude Shannon, who not only mapped Boole’s logical system onto electrical circuits but also developed a mathematical theory of communication. Computing answers suddenly became possible in the digital realm, which laid the foundation for using computerized symbol manipulation as a metaphor for thinking. After all, a series of bits seems somewhat similar to the firing of neurons, so all that was needed to connect computers with cognition was a mathematical representation of the basic unit of our neural network: the neuron. That breakthrough came from Warren McCulloch and Walter Pitts, who provided a solution 4.
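To make the link between logic and neurons concrete, here is a minimal sketch of a threshold unit in the spirit of McCulloch and Pitts. It is an illustration under stated assumptions, not a reproduction of their original formalism: the function names are hypothetical, and inhibition is modeled with a negative weight, a common textbook simplification.

```python
def threshold_neuron(inputs, weights, threshold):
    """Return 1 (fire) when the weighted sum of binary inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


# Boolean gates expressed as single threshold units (hypothetical helper names).
def and_gate(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return threshold_neuron([a, b], [1, 1], threshold=2)


def or_gate(a, b):
    # A single active input is enough to reach a threshold of 1.
    return threshold_neuron([a, b], [1, 1], threshold=1)


def not_gate(a):
    # Assumption: a negative weight stands in for an inhibitory input.
    return threshold_neuron([a], [-1], threshold=0)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", and_gate(a, b), "OR:", or_gate(a, b))
    print("NOT 0:", not_gate(0), "NOT 1:", not_gate(1))
```

Because units like these can reproduce Boolean gates, networks of them can in principle express the same logic that Shannon mapped onto circuits.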

The remaining part of the story belongs to post-war computing, much better known today thanks to the celebrity-like status of Alan Turing, who defined the notion that carries his name, the so-called “Turing machine”, which further equated digital computation with information processing.
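For readers who have never seen one, a Turing machine is just a tape, a read/write head, and a table of rules. The sketch below is a toy simulator written for illustration only; the function name, the rule format, and the bit-flipping example are assumptions of this sketch, not anything taken from Turing’s paper.

```python
def run_turing_machine(tape, rules, state="start", blank="_", max_steps=1000):
    """Run a one-tape Turing machine and return the final tape contents.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R". The machine stops in the state "halt".
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
    if not cells:
        return ""
    span = range(min(cells), max(cells) + 1)
    return "".join(cells.get(i, blank) for i in span).strip(blank)


# Example rule table (hypothetical): flip every bit, halt at the first blank.
FLIP_RULES = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}

if __name__ == "__main__":
    print(run_turing_machine("1011", FLIP_RULES))
```

The point of the toy is that nothing here refers to meaning: the machine only reads, writes, and moves according to its table, which is exactly the sense of ‘symbol manipulation’ at stake in this article.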

The work of Turing and Alonzo Church opened the door to the Computational Theory of Mind (CTM for short), which claims directly that the mind is a kind of computer. Computationalism (used interchangeably with CTM) remains a research program to this day, helpful in creating and testing theories and individual models of cognition, with an emphasis on manipulating representations.

It’s hard not to notice the recent support coming from the promising trends of Machine Learning. The results of artificial neural nets used in Machine Learning display a set of properties which seem to narrow the gap between biological cognition and the results of

So where does this all take us? Brains seem to process information organized as some sort of structured content; however, we still don’t know how the processing occurs or what sort of computation is used in ‘the biological circuits’: digital, analog, or hybrid? And what representations are used?

Most cognitive scientists put forth that our brains use some form of representational code that is carried in the firing patterns of neurons. Computational accounts seem to offer an easy way of explaining how our brains carry and manipulate the perceptions, thoughts, feelings, and actions that make up our everyday experience. While most theorists maintain that representation is an important part of cognition, the exact nature of that representation is highly debated.

What is certain, however, is that the rush to find answers is currently a well-funded venture, drawing both private and public investment. 6

Seen from the perspective of the evolution of ideas, the pace of progress is striking. We invented logic roughly 2300 years ago and, 1500 years later, we realized the utility of computation. Calculus was invented only 350 years ago, and math as a discipline experienced a course correction only 100 years ago, fixing mistakes left by its pioneers. Computing machines that utilize math were invented only 80 years ago. The artificial neuron has existed for 75 years, but the improvements that led to its useful implementation took place only recently. Cognitive science, the field aimed at understanding the organizing principles of the mind, emerged only 68 years ago. The breakthroughs that allow us to use artificial neural nets to model cognitive processing occurred only over the past 10 years.

On this time scale, the use of computers to simulate and understand cognition has arrived so abruptly that we might yet discover that the computer metaphor for cognition is a mistake, similar to the misleading nineteenth-century use of the mechanistic metaphor to explain the inner workings of the human body. Some philosophers of cognitive science already believe that the term ‘the computational theory of mind’ is a misnomer 7, binding together a series of methodological and metaphysical assumptions shared by particular (and sometimes conflicting) theories that, together, compose the core of cognitive science and of early efforts to model the brain (known as computational neuroscience). It might be that we’re living in a world of modern metaphysics, and understanding this will take time and, with it, the proper perspective.

It is known as the physical symbol system hypothesis (PSSH)↩

What sets Chris Dixon’s approach apart is that it puts ideas and philosophical influences at the center, rather than the evolution of hardware, which is usually the basis of historical discussions of computers. Read the entire piece at The Atlantic↩

The work that laid the ground for abstract representation as a foundation for all further notations, including computer languages, was titled “Dissertation on the Art of Combinations”↩

See their famous article “A Logical Calculus of Ideas Immanent in Nervous Activity”↩

We’re fine not comprehending entirely why one plus one equals two. Curiously, it took roughly three hundred pages of Alfred North Whitehead and Bertrand Russell’s 1910 Principia Mathematica to explain that. So even if we’re certain of the result of summing two numbers, ‘knowing’ the multiplication table poses a serious epistemological challenge, trading certainty for a less rigid (yet still reliable) ‘knowing how’↩

In a subsequent article we’ll review which research centers are actively working on the next breakthrough in modeling brains, and what their outlook is↩

See “From Computer Metaphor to Computational Modeling: The Evolution of Computationalism” by Marcin Miłkowski, and also his definition of the Computational Theory of Mind in the Internet Encyclopedia of Philosophy↩